Cae

FastAPI backend with an MCTS-based engine for optimizing LLM conversation responses

A production-ready FastAPI backend and MCP (Model Context Protocol) server that applies Monte Carlo Tree Search (MCTS) to generate, simulate, and score multiple conversation branches and then select the optimal LLM response. It includes async processing, Redis caching, PostgreSQL/PGVector storage, Prometheus metrics, and Docker Compose deployment, and is suited to building advanced LLM applications that require conversation analysis, response optimization, and MCP-compatible integrations.
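The listing does not show Cae's code. As a rough illustration of the general technique it names, here is a minimal UCT-style MCTS over conversation branches. It assumes the caller supplies a `propose` function (candidate next turns, e.g. sampled from an LLM) and a `score` function (a goal-oriented reward in [0, 1]). `mcts`, `Node`, `toy_propose`, `toy_score`, and all parameters are hypothetical and are not Cae's API.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical callback types (not Cae's API):
# propose(history) -> candidate next turns; score(history) -> reward in [0, 1].
Propose = Callable[[List[str]], List[str]]
Score = Callable[[List[str]], float]


@dataclass
class Node:
    history: List[str]                     # conversation turns up to this branch
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    untried: List[str] = field(default_factory=list)  # next turns not yet expanded
    visits: int = 0
    value: float = 0.0                     # sum of rewards backed up through this node

    def ucb1(self, c: float) -> float:
        """UCB1: mean reward plus an exploration bonus for rarely visited branches."""
        if self.visits == 0:
            return math.inf
        mean = self.value / self.visits
        return mean + c * math.sqrt(math.log(self.parent.visits) / self.visits)


def mcts(history: List[str], propose: Propose, score: Score,
         iterations: int = 200, c: float = 1.4, max_depth: int = 2,
         seed: int = 0) -> str:
    """Return the next turn whose subtree received the most visits."""
    rng = random.Random(seed)
    root_len = len(history)
    root = Node(history=list(history), untried=list(propose(history)))
    if not root.untried:
        raise ValueError("propose() returned no candidate responses")

    for _ in range(iterations):
        node = root
        # 1. Selection: follow the highest-UCB1 child while the node is fully expanded.
        while not node.untried and node.children:
            node = max(node.children, key=lambda n: n.ucb1(c))
        # 2. Expansion: turn one untried candidate into a new child branch.
        if node.untried:
            turn = node.untried.pop(rng.randrange(len(node.untried)))
            child_hist = node.history + [turn]
            depth = len(child_hist) - root_len
            child = Node(history=child_hist, parent=node,
                         untried=list(propose(child_hist)) if depth < max_depth else [])
            node.children.append(child)
            node = child
        # 3. Simulation: extend the branch with random turns up to max_depth, then score it.
        rollout = list(node.history)
        while len(rollout) - root_len < max_depth:
            options = propose(rollout)
            if not options:
                break
            rollout.append(rng.choice(options))
        reward = score(rollout)
        # 4. Backpropagation: credit the reward to every node on the path to the root.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent

    best = max(root.children, key=lambda n: n.visits)
    return best.history[root_len]


# Toy stand-ins (hypothetical): a real system would sample candidates from an
# LLM and score simulated conversations against the user's goal.
def toy_propose(history: List[str]) -> List[str]:
    if len(history) % 2 == 1:  # last turn was the user's: propose assistant replies
        return ["reply_short", "reply_helpful", "reply_offtopic"]
    return ["user_satisfied", "user_confused"]  # simulated user reactions


def toy_score(history: List[str]) -> float:
    # Assumes history[0] is the user's message and history[1] the assistant reply.
    base = {"reply_short": 0.4, "reply_helpful": 0.9, "reply_offtopic": 0.1}
    reward = base[history[1]]
    if "user_confused" in history[2:]:
        reward -= 0.2
    return reward


if __name__ == "__main__":
    conversation = ["user: How do I reset my password?"]
    print(mcts(conversation, toy_propose, toy_score, iterations=300))
```

The constant `c` trades off exploring rarely tried branches against exploiting branches that already score well. Returning the most-visited child rather than the one with the highest mean reward is a common, more robust final choice in MCTS.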

Features

MCTS Conversation Analysis

Multi-Branch Response Generation

Conversation Simulation

Goal-Oriented Scoring

Semantic Caching

Prometheus Metrics

Pricing

Free plan available

Similar Boilerplates

SaaS Rock

👨🏻‍💻 by Alexandro Martínez · Paid

Quick-start your MVP with out-of-the-box SaaS features like Authentication, Pricing & Subscriptions, Admin & App portals, Entity Builder (CRUD, API, Webhooks, Permissions, Logs...), Blogging, CRM, Email Marketing, Page Block Builder, Notifications, Onboarding, Feature Flags, Prompt Flow Builder, and more. Don't reinvent the wheel, again.

Makerkit

👨🏻‍💻 by Giancarlo Buomprisco · Paid

Build unlimited SaaS products with any SaaS Starter Kit.

Save months of work and focus on building a profitable business.

Larafast

👨🏻‍💻 by Sergey Karakhanyan · Paid

Launch your app in days with the Laravel SaaS Starter Kit, with ready-to-use features for Payments, Auth, Admin, Blog, SEO, and more.

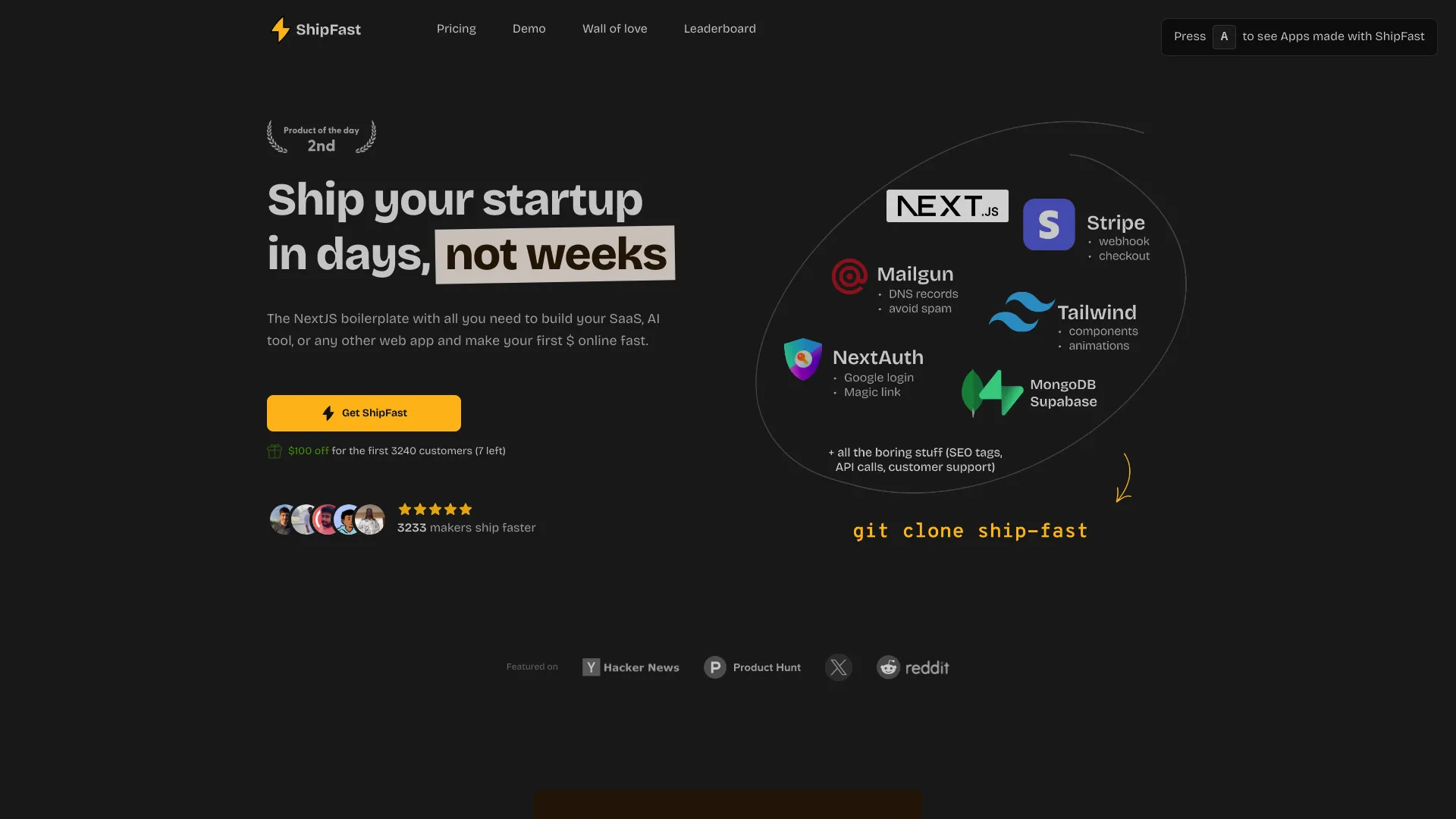

Shipped

👨🏻‍💻 by Luca Restagno · Paid

Launch your startup in days, not months. The Next.js Startup Boilerplate for busy founders, with all you need to build and launch your startup soon.

Shipixen

👨🏻‍💻 by Dan Mindru · Paid

Go from nothing → deployed Next.js codebase without ever touching config. Ship a beautifully designed Blog, Landing Page, SaaS, Waitlist or anything in between. Today.